▎AI-based Simulation and Optimization of Lithography Process

►Prof. Chia-Wen Lin, National Tsing Hua University

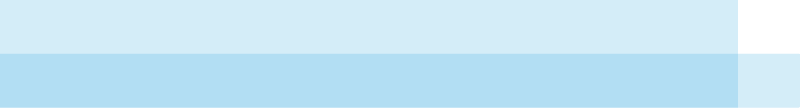

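This highlighted technology is the result of a collaboration with United Microelectronics Corporation (UMC). It has three main features. First, it is the world's first system that uses computer vision to accurately predict the distortions the lithography process introduces into wafer circuit patterns, and it runs roughly two to three orders of magnitude faster than existing commercial software. Second, it can correct the photomask patterns of a layout to compensate for lithography-induced distortion. Third, the model can flag novel layout patterns that it cannot yet predict accurately, and these patterns are then used to refine the model. Because the technology is well ahead of current EDA design tools, it is expected to bring about a paradigm shift in EDA for semiconductor manufacturing. Part of the work has been published in IEEE T-CAD and received the best paper award at VLSI Design/CAD 2020, Taiwan's largest symposium in this field.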

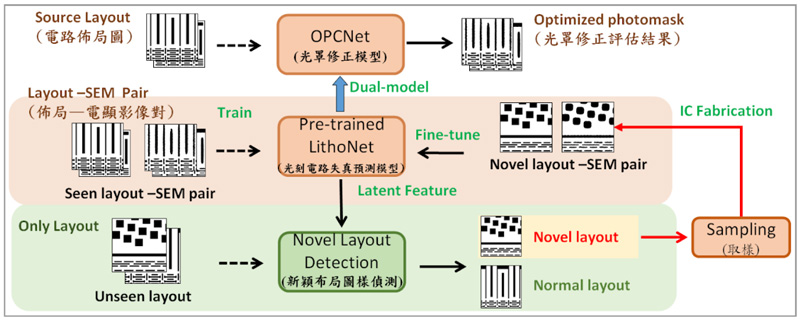

Developed in collaboration with UMC, this is the world's first set of vision-based EDA tools for predicting circuit shape distortions caused by lithography and etch, for photomask optimization, and for layout novelty detection. Trained on ADI/AEI (after-develop and after-etch inspection) images, the technology learns the effects of the photolithography and etch processes, so it can predict the shape distortions of the metal layers of IC products as well as the optimal photomask patterns that compensate for those distortions. The model can also detect novel layout patterns and update itself through active learning. Moreover, the learning-based approach can be extended to other fabrication configurations simply by collecting suitable layout-SEM image pairs. It therefore marks a new milestone and a breakthrough in EDA tool development. Part of this work was published in IEEE T-CAD (May 2021) and won the best paper award at VLSI Design/CAD 2020.
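The source does not say how novel layout patterns are identified for active learning. One common way to realize such a loop is query-by-committee: train several models and flag the layout clips on which their predicted contours disagree most, then send those clips for new SEM measurement. The sketch below assumes that scheme; the function names, the ensemble, and the contour-offset representation are hypothetical illustrations, not the authors' method.

```python
from statistics import pvariance


def disagreement(predictions):
    """Average per-point variance of the ensemble's predictions for one clip.

    `predictions` holds one list per ensemble member (hypothetical setup); each
    list gives that member's predicted contour offsets at the same sample points.
    """
    n_points = len(predictions[0])
    per_point = [pvariance([member[i] for member in predictions]) for i in range(n_points)]
    return sum(per_point) / n_points


def select_novel_clips(ensemble_preds, budget):
    """Return the ids of the `budget` clips on which the ensemble disagrees most.

    These are the candidates to measure and add to the training set.
    """
    ranked = sorted(ensemble_preds, key=lambda clip: disagreement(ensemble_preds[clip]), reverse=True)
    return ranked[:budget]


preds = {
    "clip_a": [[1.0, 2.0], [1.1, 2.0], [0.9, 2.0]],   # members agree: familiar pattern
    "clip_b": [[1.0, 5.0], [3.0, 1.0], [-1.0, 2.0]],  # members disagree: novel pattern
}
print(select_novel_clips(preds, budget=1))  # ['clip_b']
```

Disagreement is used here because it needs no ground truth: a clip the models cannot agree on is, under this assumption, one the training data does not yet cover well.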


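▲ The semiconductor-process EDA tools developed in this work: a model that predicts circuit distortion in the lithography process, a model that automatically corrects photomask patterns, and a model that detects novel layout patterns.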

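▲ Using deep learning models, the technology rapidly predicts the circuit distortions produced by IC lithography and the corresponding photomask corrections, with applications in layout evaluation for IC manufacturing, IC defect and hotspot prediction, and photomask optimization.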

This research is an outcome of a Ministry of Science and Technology (MOST) AI project. For the project name, please refer to serial no. 20 on the List of MOST AI Projects in the Appendix.

▎NTU Medical Genie Precision Health Platform

►Prof. Fei-Pei Lai, National Taiwan University

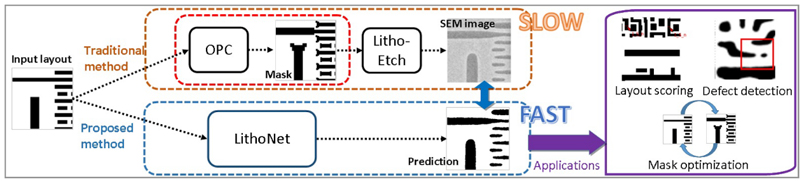

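Precision medicine aims to provide personalized care tailored to each individual's environment and disease condition by collecting and analyzing large volumes of data, including electronic medical records, genetic data, and lifestyle and living-environment data; lifestyle and environmental factors have an especially strong influence. The NTU Medical Genie Precision Health Platform builds disease prediction models by integrating wearable devices, IoT environmental sensors, and deep learning. Besides providing round-the-clock real-time monitoring that supports the decisions of healthcare staff, it addresses the long-standing problem that disease-risk information could not be collected effectively after patients are discharged from hospital.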

The NTU Medical Genie Precision Health Platform offers the following advantages:

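- Real-time monitoring with proactive anomaly alerts, putting precision health management into practice.

- Continuous monitoring of physiological and home-environment data, with personalized lifestyle recommendations.

- Real-time data synchronization from wearable devices of multiple brands (Garmin, Fitbit, Apple).

- The model for predicting acute exacerbations of chronic obstructive pulmonary disease (COPD) has reached an accuracy of 93.5%.

- The model for predicting acute panic attacks has reached an accuracy of 81.3%.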

The platform mainly consists of wearable devices, IoT environmental sensors, deep learning models, a personal health app, and a case-management backend. It automatically collects and monitors users' lifestyle and environment data and predicts the likelihood of emergencies to help medical staff make decisions. The platform is also expected to solve the problem of collecting and monitoring disease-risk information after patients are discharged from hospital. Advantages of NTU Medical Genie (https://ntu-med-god.ml/):

1. Continuous real-time monitoring of lifestyle and environment data.

2. Compatibility with multiple wearable devices (Garmin, Fitbit, Apple).

3. An AECOPD (acute exacerbation of chronic obstructive pulmonary disease) module that predicts exacerbations early from lifestyle, environmental factors, and medical records. It achieves 93.5% accuracy in predicting whether a patient will suffer an acute exacerbation within the next seven days.

4. The NTU Medical Genie app (iOS/Android) provides modular symptom recording, acute-attack prediction, and personalized lifestyle advice for patients with chronic diseases. The app is currently available in the official app stores in Taiwan and Japan.

5. Alerts to medical providers when immediate care is needed, reducing the likelihood that a patient's health status declines.
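Item 3 frames AECOPD prediction as a binary question: will an acute exacerbation occur within the next seven days? A minimal sketch of how such labels and the reported accuracy metric could be derived from daily monitoring records follows. The window convention (strictly after day d, up to and including day d + 7) and the function names are assumptions; the platform's actual labeling pipeline is not described in the source.

```python
from datetime import date, timedelta


def label_days(days, exacerbation_dates, horizon=7):
    """Label each monitoring day 1 if an acute exacerbation begins within the
    following `horizon` days (after day d, up to and including d + horizon), else 0.
    The window convention is an assumption made for this sketch."""
    labels = {}
    for d in days:
        window_end = d + timedelta(days=horizon)
        labels[d] = int(any(d < e <= window_end for e in exacerbation_dates))
    return labels


def accuracy(y_true, y_pred):
    """Fraction of days on which the predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical example: ten monitored days, one exacerbation on 9 January.
days = [date(2021, 1, 1) + timedelta(days=i) for i in range(10)]
labels = label_days(days, [date(2021, 1, 9)])
print(sum(labels.values()))  # 7 (2 to 8 January are positive)
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))  # 0.75
```

Because accuracy counts negatives and positives equally, a figure such as 93.5% is easiest to interpret alongside the share of positive days in the data.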

▲ NTU Medical Genie Platform

▲ NTU Medical Genie App

This research is an outcome of a Ministry of Science and Technology (MOST) AI project. For the project name, please refer to serial no. 28 on the List of MOST AI Projects in the Appendix.

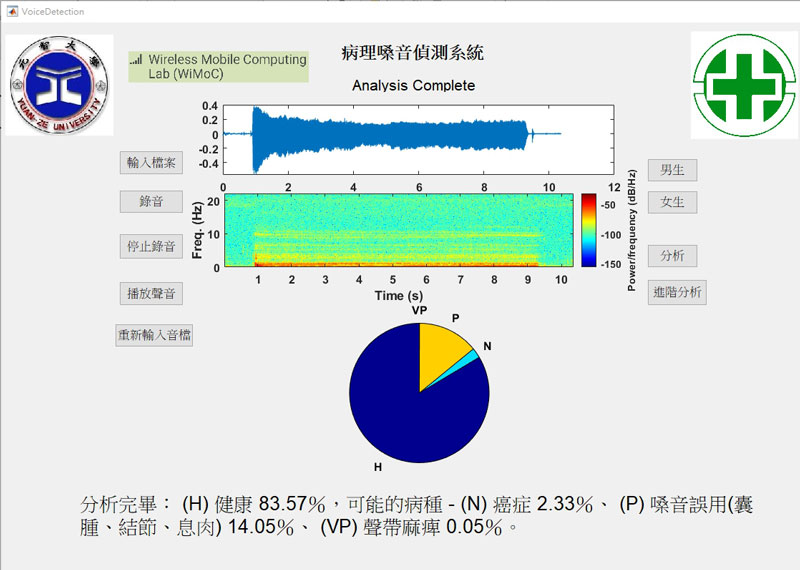

▎Next Generation Diagnosis of Acoustic Pathology Using Ensemble Learning Models

►Prof. Shih-Hau Fang, Yuan Ze University

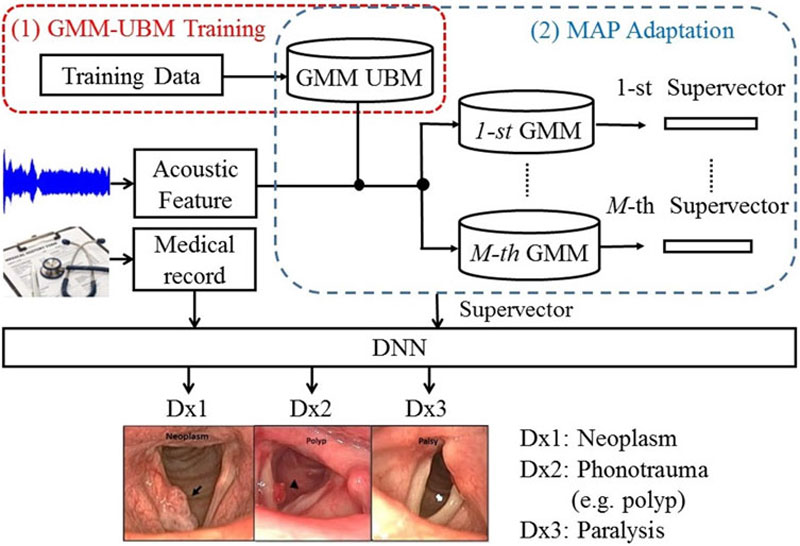

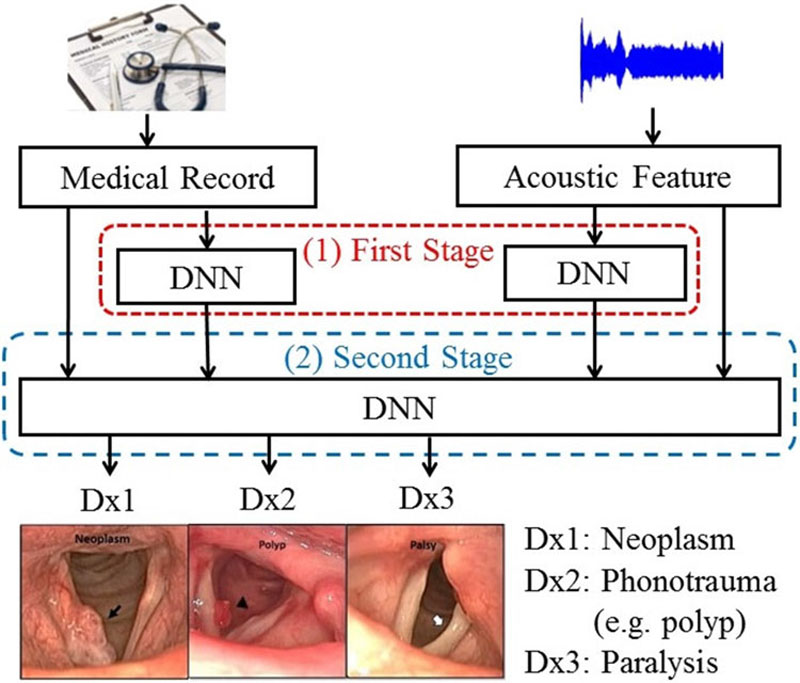

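Based on the voice database of Far Eastern Memorial Hospital, our team proposed a multimodal voice-based diagnosis algorithm that assesses the health of a speaker's vocal folds. Our paper, the world's first on detecting voice disorders with deep learning, became the most-cited article in the international Journal of Voice within three years. By further analyzing patients' demographic characteristics, clinical symptoms, and related information, the system classifies detected abnormalities into three clinically common categories, namely benign vocal lesions, tumors, and vocal palsy, with world-leading accuracy. In 2018 and 2019 the team also hosted an international competition at the IEEE Big Data conference, raising Taiwan's international visibility and influence in this field, and it received the 2019 National Innovation Award.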

Based on the FEMH (Far Eastern Memorial Hospital) database, in which thousands of speech samples were collected by the hospital's speech clinic from 2012 to 2019, our research group proposed a next-generation diagnosis of acoustic pathology using ensemble learning models. In 2019 we published the first paper exploiting AI for voice disorder detection; it became the most cited article published in Journal of Voice since 2018. The proposed multimodal approach integrates demographic features and clinical symptoms to classify phonotraumatic lesions, glottic neoplasm, and vocal palsy with an accuracy of 87.26%, the best performance reported in the literature, and it won the National Innovation Award in 2019. Our team hosted the Voice Disorder Detection Competition at IEEE Big Data 2018-2019, the first voice disorder competition held at an international conference. More than 100 teams from 27 countries participated, increasing Taiwan's international research visibility.
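The paragraph describes a multimodal ensemble that combines acoustic analysis with demographic features and clinical symptoms to separate three disorder classes. One simple way to combine such models is late fusion: each modality outputs class probabilities and a weighted average makes the final call. The sketch assumes that scheme, with hypothetical weights and probability values; the published model's architecture and fusion rule may differ.

```python
CLASSES = ("phonotraumatic lesion", "glottic neoplasm", "vocal palsy")


def late_fusion(prob_sets, weights):
    """Weighted average of per-modality class-probability vectors."""
    total = sum(weights)
    fused = [0.0] * len(CLASSES)
    for probs, weight in zip(prob_sets, weights):
        for i, p in enumerate(probs):
            fused[i] += weight * p / total
    return fused


def classify(prob_sets, weights):
    """Return the class with the highest fused probability, plus the fused vector."""
    fused = late_fusion(prob_sets, weights)
    best = max(range(len(CLASSES)), key=lambda i: fused[i])
    return CLASSES[best], fused


# Hypothetical outputs of two per-modality models for one patient.
acoustic = [0.5, 0.3, 0.2]   # from the voice recording
clinical = [0.2, 0.7, 0.1]   # from demographics and clinical symptoms
label, fused = classify([acoustic, clinical], weights=[0.6, 0.4])
print(label)  # glottic neoplasm
```

Here the acoustic model alone would pick the first class, but the clinical evidence shifts the fused decision; that interplay is the motivation for combining modalities.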

Reference:

- Most Cited Journal of Voice Articles

- 2019 National Innovation Award

- IEEE Big Data FEMH Voice Disorder Detection Competition

▲ The Elsevier web page for Journal of Voice and the architecture of the proposed multimodal hybrid model.

▲ The National Innovation Award and the GUI of the proposed voice disorder detection system.

This research is an outcome of a Ministry of Science and Technology (MOST) AI project. For the project name, please refer to serial no. 15 on the List of MOST AI Projects in the Appendix.

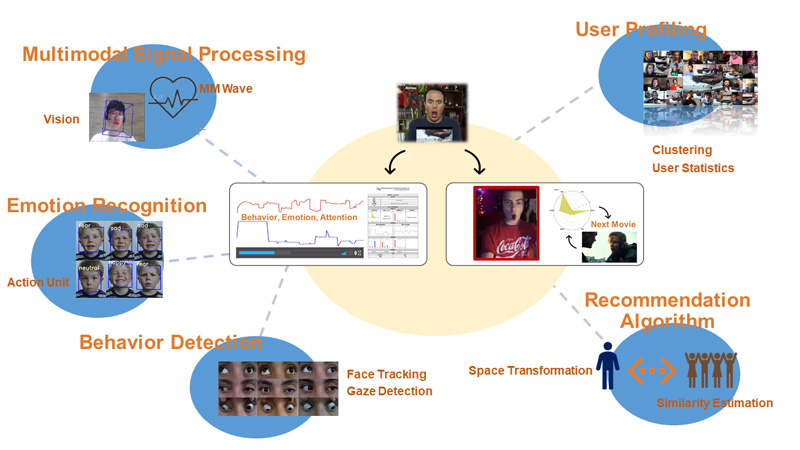

▎Multimedia Recommendation Using Viewer Affective Behavior Responses

►Prof. Y.-W. Peter Hong, National Tsing Hua University

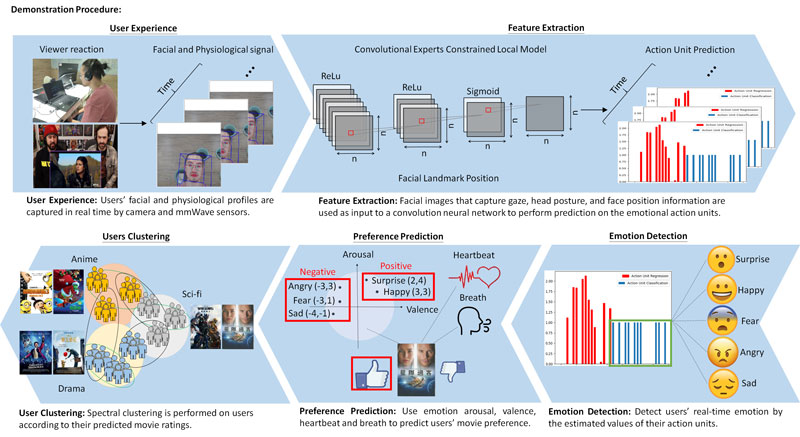

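Traditionally, video recommendation systems can only guess viewers' preferences, and recommend videos, from viewers' explicit ratings and the genres of the videos. They cannot tell which part of a video a viewer likes, why, or what the viewer feels while watching. Our team proposes a validation platform for personalized recommendation that combines emotional-reaction recognition with contactless physiological and behavioral modeling. We use structured crowd user feedback, visual and audio content, and users' multimodal reactions to learn an intelligent model that recommends both movies and friends. By combining emotion and behavior modules, the system delivers accurate, personalized, and non-intrusive recommendations of multimedia content and friends. The platform was jointly developed by Prof. Y.-W. Peter Hong, Prof. Shih-Hau Fang, Prof. I-Hsiang Wang, Prof. Chi-Chun Lee, and their teams, and was demonstrated last year at the MOST interdisciplinary exchange and results exhibition.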

Traditionally, recommender systems rely on users' ratings and movie genres to infer user preferences, but they cannot capture viewers' true underlying emotions toward the movies. We therefore present a next-generation personalized recommendation system that integrates emotional reaction recognition with contactless physiological behavioral modeling. We use structured crowd user feedback, audio-visual content, and multimodal reactions to learn intelligent recommender models. The movie content and facial reactions are modeled using a deep intra-genre projection network. By incorporating both emotion and behavior modules, the system predicts users' preferences toward multimedia content in an accurate, personalized, and non-obtrusive manner. The platform was jointly developed by the research groups of Prof. Y.-W. Peter Hong, Prof. Shih-Hau Fang, Prof. I-Hsiang Wang, and Prof. Chi-Chun Lee. The demo system was exhibited at the MOST Artificial Intelligence interdisciplinary workshop last year.

Reference:

- Taiwan Is Crazy About AI! Building an AI Ecosystem with AI R&D Strength: https://reurl.cc/Q6dW00

▲ System overview. The system analyzes the user's emotional reactions and physiological signals, such as breathing and heart rate, while the user watches a video (left), and recommends (right) movies with similar emotional components that are likely to move the user, as well as friends with similar reactions to movies.

▲ System flow. A deep learning model first extracts facial features (such as face position and gaze direction) and physiological signals from the user during viewing, and performs emotion recognition using both the visual and the physiological signals. The action units associated with the emotional responses serve as features; comparing them with those of other users in the database yields the current user's preference for the movie and the group of people with similar tastes.
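The flow above compares a viewer's emotional responses with those of other users in the database to find people with similar tastes. A minimal sketch of that comparison step follows, assuming each user is summarized by a fixed-length vector of emotion or action-unit intensities over the same clips and that cosine similarity is the matching criterion; both assumptions are ours, and the function names are hypothetical.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def most_similar_users(target, profiles, k=1):
    """Rank other users by how closely their emotion-response vector matches `target`."""
    ranked = sorted(profiles, key=lambda uid: cosine(target, profiles[uid]), reverse=True)
    return ranked[:k]


# Hypothetical per-clip emotion intensities (e.g. amusement) for three clips.
me = [0.9, 0.1, 0.8]
others = {"ann": [0.8, 0.2, 0.9], "bob": [0.1, 0.9, 0.0]}
print(most_similar_users(me, others))  # ['ann']
```

Cosine similarity compares the shape of two response profiles rather than their overall intensity, so a viewer who reacts strongly and one who reacts mildly to the same scenes still count as similar.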

This research is an outcome of a Ministry of Science and Technology (MOST) AI project. For the project name, please refer to serial no. 13 on the List of MOST AI Projects in the Appendix.

▎Extended Reality Interactive Virtual Tour

►Assistant Prof. Pei-Yuan Wu, National Taiwan University

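The NTU team collaborated with Silicon Valley start-up Tourmato to develop convolutional neural networks for panoramic images, and built panoramic visual detection techniques on top of them. Combined with robots, these techniques provide a remote, real-time virtual house-viewing service for property buyers and sellers. To address privacy issues that may arise from images of property interiors, the partners also developed face de-identification for panoramic images, which was demonstrated at the 2020 Consumer Electronics Show (CES).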

NTU and Silicon Valley start-up Tourmato collaborated to develop deep neural networks suitable for 360-degree videos, as well as panorama saliency detection techniques. Incorporating robotics solutions, we provide a remote virtual tour service that lets customers at home see, learn about, and interact with remote places and local people through their personal mobile devices. In view of potential privacy concerns about panorama data, NTU and Tourmato also developed 360-degree video face de-identification techniques, which were demonstrated at the Consumer Electronics Show (CES) 2020.
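The source does not detail the de-identification method. One 360-specific detail any such method has to handle is that an equirectangular frame wraps horizontally, so a face straddling the left/right seam appears at both edges of the image. The sketch below assumes a face box has already been detected and anonymizes it by block averaging (a mosaic), taking column indices modulo the frame width; the function, the mosaic approach, and the grayscale list-of-rows frame are illustrative assumptions, not NTU's or Tourmato's implementation.

```python
def pixelate_box(frame, x0, y0, w, h, block=2):
    """Return a copy of `frame` with the box (x0, y0, w, h) mosaicked.

    `frame` is a list of rows of grayscale ints in equirectangular layout.
    Columns wrap around, so a box with x0 + w > width continues at column 0.
    Assumes w <= width; rows are clipped at the bottom edge.
    """
    height, width = len(frame), len(frame[0])
    out = [row[:] for row in frame]
    for by in range(y0, min(y0 + h, height), block):
        for bx in range(x0, x0 + w, block):
            rows = range(by, min(by + block, y0 + h, height))
            cols = [c % width for c in range(bx, min(bx + block, x0 + w))]
            cells = [(r, c) for r in rows for c in cols]
            # Replace every pixel in this block with the block's integer mean.
            mean = sum(frame[r][c] for r, c in cells) // len(cells)
            for r, c in cells:
                out[r][c] = mean
    return out


# A 2 x 4 toy frame; the face box starts at the last column and wraps to column 0.
frame = [[0, 10, 20, 30],
         [40, 50, 60, 70]]
print(pixelate_box(frame, x0=3, y0=0, w=2, h=2))
# [[35, 10, 20, 35], [35, 50, 60, 35]]
```

Without the modulo step, the half of the face that wraps to the opposite edge would be left untouched, which is exactly the kind of privacy leak that panoramic content makes easy to miss.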


▲ The NTU team and Silicon Valley start-up Tourmato jointly developed a remote, live virtual house-viewing service; the jointly developed techniques were exhibited at CES 2020.

This research is an outcome of a Ministry of Science and Technology (MOST) AI project. For the project name, please refer to serial no. 18 on the List of MOST AI Projects in the Appendix.