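[Event Announcement] You are warmly invited to register for the "Taiwan AI Outlook and Strategic Deployment Press Conference and Cross-Ministerial Exchange Forum" (Date: 2023/03/28)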

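Emergency department (ED) care involves high uncertainty and time pressure. No one can predict when patients will arrive or what illnesses they will have, and special events can cause a sudden surge of patients. Introducing AI-assisted diagnosis and treatment at each stage of the patient care process can therefore keep the quality of care consistent, reduce the burden on medical staff, shorten patients' length of stay, and increase ED capacity. National Taiwan University Hospital (NTUH) actively promotes AI technology to build a smart healthcare environment that improves the quality of care. Through the National Science and Technology Council (NSTC) Capstone project "Smart Emergency Department: A Comprehensive Strategy to Improve ED Patient Flow and Resolve Crowding with Artificial Intelligence," the Department of Emergency Medicine and the National Taiwan University Joint Research Center for AI Technology and All Vista Healthcare (hereafter the NTU AI Center) have built an innovative smart-ED care process. It has completed preliminary validation on nearly 10,000 patient visits in the NTUH emergency department. The ED workflow is now fully AI-enabled: 13 AI models were developed for six key stages of the ED process to help relieve ED crowding.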

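The project was planned and carried out by a strong interdisciplinary team. Former NTU Vice President Ming-Syan Chen and Vice President Wanjiun Liao led NTU technical teams that included Professors Hsin-Hsi Chen, Li-Chen Fu, Chu-Song Chen, and Weichung Wang. On the hospital side, NTUH Superintendent Ming-Shiang Wu and Chief of Emergency Medicine Chien-Hua Huang led nearly ten ED attending physicians.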

Building a computing and data platform to bring AI to the entire ED workflow

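Several hospital units contributed personnel, equipment, and software to the effort, including the Department of Emergency Medicine, the Information Technology Office, the Department of Medical Research, and the Center for Intelligent Healthcare. They were joined by several research teams at the NTU AI Center and by industry partners such as ASUS Cloud, Advantech, EBM Technologies, and 維曙智能科技. Together they built the hospital's ED private-cloud computing infrastructure and data platform, connected it to hundreds of real-time multimodal medical record items within the hospital, and developed a real-time AI assistance system. The ED private cloud currently has six dedicated GPU and CPU server hosts, divided into two high-availability clusters (HA Clusters). These provide computing resources for AI model training and inference and support stable 24-hour ED operations. All services, AI models, and data management platforms needed by the smart-ED workflow run on this private cloud. The platform integrates data interfacing and transfer, model deployment and updating, computing resource allocation, and a visual dashboard for data analysis. It also runs several clinical service systems, including the AI assistance system, a real-time ED control-center dashboard, a bed management system, and a bed and equipment location-tracking system.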

Six key ED workflow stages, 13 AI models developed

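The team selected six key points in the ED care process and developed corresponding AI models to assist physicians with diagnosis and treatment. Model development began with an application for retrospective in-hospital data, supplemented with open medical datasets from abroad for training. Several models achieved state-of-the-art (SOTA) performance, and the work has produced nearly 30 international journal and conference papers. The 13 ED-specific AI models are used in ED triage, physician history-taking, chest X-ray examination, tube malposition detection, prognosis recommendations after in-hospital cardiac arrest, disposition assessment for observation patients, and early high-risk detection and warning. Throughout the patient's ED stay, they provide up-to-date AI recommendations based on the patient's current condition: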

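1. Fast, accurate electronic triage: predicts triage level, probability of hospital admission, and length of stay, helping physicians triage and route patients effectively and accurately.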

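2. Fast, precise medical history analysis: predicts potential symptoms and diagnostic ICD codes from the patient's history, alerting physicians to possible causes and treatments.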

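3. Real-time risk grading and identification: analyzes supine chest X-rays of bedridden patients in real time. It detects pulmonary tuberculosis, detects pneumothorax and its location, and detects tube malposition.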

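4. Early, appropriate, and safe discharge: predicts the likelihood of a return visit within three days, the probability of death during observation, the likelihood of hospital admission, and the likelihood of a stay exceeding 24 hours. It also provides a similar-case retrieval mechanism to help physicians assess the risk of discharging a patient.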

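5. Prognostic assessment after cardiac arrest: using a technique originally developed by the team, the system automatically detects gray and white matter in brain CT images and computes the gray-to-white matter ratio (GWR). This helps physicians plan subsequent treatment quickly and accurately.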
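Once gray- and white-matter regions have been segmented, the GWR is a simple ratio of mean CT attenuation. The sketch below is a minimal Python version that assumes the Hounsfield-unit (HU) values have already been sampled from regions of interest. The team's automatic segmentation step is not shown. Published GWR definitions differ in which structures are sampled. The function name and sample values are hypothetical.

```python
from statistics import mean


def gray_white_ratio(gray_hu, white_hu):
    """Gray-to-white matter ratio (GWR) from CT attenuation values.

    gray_hu, white_hu: Hounsfield-unit samples from gray-matter and
    white-matter regions of interest. Which regions are sampled is an
    assumption of this sketch; published GWR variants differ.
    """
    if not gray_hu or not white_hu:
        raise ValueError("both regions need at least one HU sample")
    white = mean(white_hu)
    if white <= 0:
        raise ValueError("white-matter mean HU must be positive")
    return mean(gray_hu) / white


# Hypothetical ROI samples (illustrative numbers, not patient data)
print(round(gray_white_ratio([36, 38, 37], [29, 30, 31]), 3))  # 1.233
```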

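6. Early high-risk warning via patient wristband: ED patients wear a smart wristband that continuously monitors physiological signals. A model estimates the probability of a high-risk event within the next 30 to 60 minutes and issues an immediate alert, so clinical staff can intervene earlier.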

Protecting patient privacy, running prospective clinical trials, and deploying at multiple hospital branches

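The research team and industry partners spent a year and a half building the smart-ED AI assistance system on the ED private cloud, designed around the needs of the NTUH emergency department. The system connects to hundreds of real-time medical record fields and medical images. It updates every ED patient's status and AI recommendations every 15 minutes and can track at least 500 ED patients across multiple branches at the same time. With the system, physicians can check on their patients at any moment, as if an AI assistant were always on hand to remind them. This reduces physicians' workload and keeps the quality of care consistent.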

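To confirm the clinical benefit while protecting patient privacy, the team wrote a rigorous IRB protocol covering the entire innovative ED workflow, which the NTUH Research Ethics Committee reviewed and approved. All medical records and image data are de-identified in real time for the clinical trial, and nearly 10,000 cases have been validated so far. To make these smart-healthcare tools available in every region, the hospital plans to begin trial use at its Yunlin and Hsinchu branches starting in February this year. This will help narrow the gap in medical resources between urban and rural areas. It will also allow the team to keep collecting data and tuning the models to the care needs of each region.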

A smart ED floor: visual management of patient flow, beds, and equipment

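The in-hospital positioning system precisely locates more than 100 beds and hundreds of pieces of equipment in the main hospital's ED. The clinical workflow is also being adjusted so that staff use the bed management system to track each bed's location and status (e.g., vacant, occupied, awaiting cleaning, under maintenance). ED physicians and nurses can open the real-time ED control-center dashboard from clinic computers, tablets, or phones. The dashboard gives them a quick, complete view of on-site patients and resources so they can respond quickly to emergencies.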

NSTC funding to develop the smart ED and build research momentum

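Introducing AI technology requires substantial resources and manpower. Three years of NSTC support and help from many partners have laid the foundation for the hospital's smart ED, and the innovative workflow will continue to be trialed at each branch. This smart-ED care process is the hospital's best example of its move into smart healthcare. To keep this innovation cycle accelerating, the hospital will expand applications and continue optimizing operations. It has also cleaned and organized the past ten years of ED medical records into an "ED Specialty Database." The Department of Medical Research manages the database and will open it for future medical research at the hospital. This is a milestone in NTUH's smart-healthcare efforts and the starting point of the next stage. The hospital will keep working in smart healthcare to safeguard the health of all citizens.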

Related coverage

https://www.chinatimes.com/realtimenews/20230220002229-260405?chdtv

[Event Announcement] You are welcome to register for a special lecture (2023/01/03)

https://docs.google.com/forms/d/e/1FAIpQLSfAqyWSfc1ru9AGMQHzrtDLYmxBejbIi12ukmAPv2jdkUNZeQ/viewform

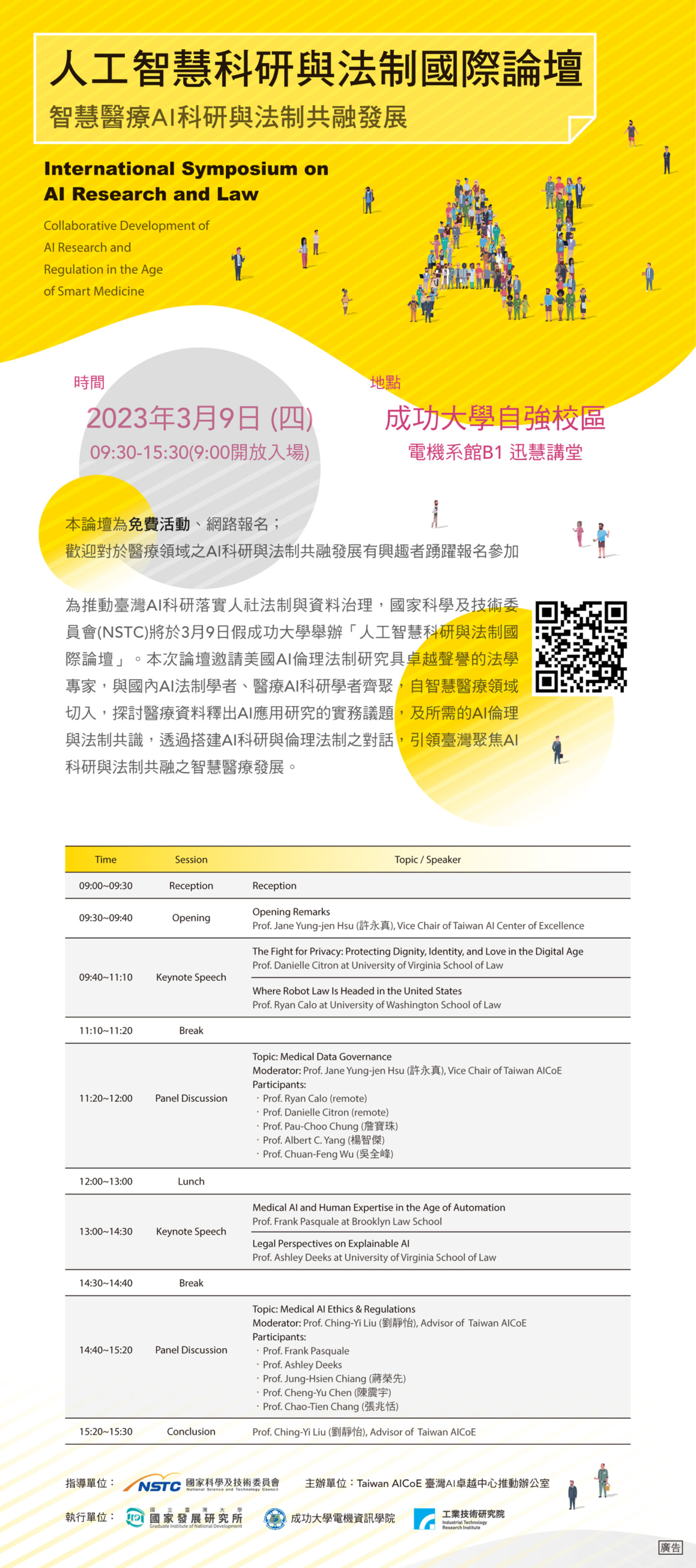

You are cordially invited to attend the "International Forum on AI Ethics and Law" (2022/12/21)

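The forum brings together international legal experts from the United States and Australia, along with Taiwanese AI and legal professionals. Participants will discuss how to respond to the challenges that the rapid growth of AI poses to current social ethics and law, and will share AI governance experience from Europe and the United States.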

Event information:

For any questions, please contact Ms. Chuang at the Taiwan AICoE Promotion Office, 02-2511 3318 ext. 411.


Venue: Taipei Nangang Exhibition Center, Hall 1

[Event Promotion] AI Accelerators Short Course

March 8 to March 29, 2022

Prof. H. T. Kung, William H. Gates Professor of Computer Science and Electrical Engineering, Harvard University

● Online registration: https://neti.cc/L2QLpea

● More information: https://aiacademy.tw/ht-class/

Background:

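Over the past decade, leading technology companies worldwide have developed many dedicated AI accelerator chips to speed up data processing and make it more efficient. These accelerators are expanding AI applications and redefining computing platforms. At the same time, AI accelerator architectures, software, and hardware continue to evolve rapidly, and researchers and practitioners should use this trend to embrace the change. This course will help engineers, researchers, and graduate students in Taiwan understand the big picture of this new computing era, along with its technical opportunities and challenges.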

Organizers:

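Taiwan AI Academy Foundation; Ministry of Science and Technology (MOST) AI Manufacturing Systems (AIMS) Research Center; Academia Sinica Research Center for Information Technology Innovation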

Contact: Ms. Kang, (02) 8512-3731 ext. 12

Course schedule:

March 8 to March 29, 2022, every Tuesday and Thursday.

Dates: 3/8, 3/10, 3/15, 3/17, 3/22, 3/24, 3/29.

18:30 to 20:30 each evening, 14 hours in total.

Venue:

National Tsing Hua University (No. 101, Sec. 2, Guangfu Rd., Hsinchu City), General Building IV, Room R224

Map: https://edu.tcfst.org.tw/map_nthuold.htm

Who should apply:

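(1) Master's and PhD students in electrical engineering, electronics, computer science, semiconductors, and related fields (advisor approval required; please provide your advisor's name and phone number when registering).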

(2) Faculty and researchers who already apply AI techniques in their research.

(3) Industry professionals in semiconductor R&D, IC design, and AI applications.

Admission requirements:

(1) Applicants should have the following background knowledge:

(A) Machine learning fundamentals (e.g., convolutional neural networks).

(B) Basic concepts of computer architecture (e.g., CPU, GPU, memory hierarchy) and a basic understanding of system-on-chip (SoC) accelerators.

(C) Working knowledge of college-level linear algebra and statistics.

(2) Applicants will be reviewed on relevant work experience and need to determine the final list of participants.

Number of participants:

Up to 80 (fewer may be admitted), with about half of the places expected to be reserved for industry professionals.

Tuition:

(1) Free for master's and PhD students and for faculty and researchers engaged in AI research.

(2) NT$24,000 per industry professional.

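Course fees will cover course expenses such as the venue, administration, and teaching assistant fees. The organizers will use the remainder to develop case-study materials on applying AI accelerators.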

Course description:

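This course introduces the principles of AI accelerators. It consists of six two-hour lectures, followed by a seventh session for student presentations and a course wrap-up discussion. The sessions cover the following topics: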

(1) Accelerators for deep neural networks, strategies

(2) Parallelizing neural network computations, minimizing memory accesses and data transfers

(3) Model compression with quantization and pruning, low-bitwidth number formats

(4) Fast approximate neural network functions, knowledge distillation, self-supervised compression using unlabeled data

(5) Speeding up model training, distributed learning and inference

(6) Leveraging physics-based simulation, protecting data privacy and model security

(7) Student presentations and course wrap-up discussion
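Topic (3) pairs two standard model-compression steps. The pure-Python sketch below shows symmetric per-tensor int8 quantization and unstructured magnitude pruning on a flat list of weights. It is an illustration only, not course material. The function names are hypothetical, and real frameworks apply these steps per layer on tensors.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest |w|; the first k are removed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:k])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.02, 0.9]
q, scale = quantize_int8(weights)
print(q, scale)
print(magnitude_prune(weights, 0.5))  # [0.0, -1.27, 0.0, 0.9]
```

Each int8 code needs one byte instead of four for a float32 weight. The reconstruction error per weight is at most half a quantization step.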

Course format:

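Classes are held in person by default. Participants who need remote attendance must still attend the first (3/8) and seventh (3/29) sessions in person. The course includes group discussions, with office hours led by teaching assistants to encourage interaction. Lecture slides will be provided to participants.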

Session schedule:

18:30-19:30 Lecture

19:30-19:40 Break

19:40-20:05 Group discussion

20:05-20:30 Wrap-up

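※ The course is in principle held in person, but the format may be adjusted in line with the epidemic alert guidelines issued by the Taiwan Centers for Disease Control, Ministry of Health and Welfare. If the course must move online, it will be taught via Zoom.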

Instructor:

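H. T. Kung is the William H. Gates Professor of Computer Science and Electrical Engineering at Harvard University, and he will return to Taiwan in spring 2022 to teach this course in person. His career spans many areas of electrical engineering and computer science theory and systems. He has made original contributions in fields related to AI accelerators, including machine learning accelerators, VLSI design, high-performance computing, parallel computing, computer architecture, and networking. His honors include membership in the US National Academy of Engineering and in Academia Sinica in Taiwan, a Guggenheim Fellowship, and the 2015 ACM SIGOPS Hall of Fame Award. He began advocating for the founding of the Taiwan AI Academy in 2017 and has served as its president since 2018.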

How to register:

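Registration is online only. The first-round deadline is February 25, 2022; please complete the registration form online by 23:59 that day (online registration: https://neti.cc/L2QLpea). Fill in the form completely so that the review can be completed. If places remain after the first-round admission list is announced, a second round of registration will open.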

Admission notice and enrollment:

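1. Applicants will receive a confirmation email after registering. Applicants who pass the review will be notified at the email address they provided; click the link in that email to confirm and complete registration and enrollment.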

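2. Admitted industry professionals must complete enrollment and payment within 3 days of receiving the admission notice. Payment can be made online by credit card or offline by bank transfer. For bank transfer, the system will generate a virtual account number, and the transfer must be completed before the payment deadline. Registration is complete only after payment.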

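3. Applicants who do not follow these rules or miss the enrollment deadline will lose their place, and late enrollment will not be accepted for any reason.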

Notes:

1. Please read this admission guide carefully before registering, so that an incomplete form or ineligibility does not affect your admission.

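2. Enter your mailing address, phone number, and email address correctly when registering online, so that you do not lose your place because notifications fail to reach you in time.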

[Applying AI Across Domains: NTU AI Center Showcases New Strength in Forward-Looking R&D]

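Digital transformation and the rapid growth of AI have become concerns for every industry in recent years. Taking a technology from research and development to real industrial use requires collaboration and investment from many parties. One of the NTU AI Center's key goals is to help connect cross-domain technologies with industry. To promote exchange and cooperation between academia and industry, the center held the online "NTU AI Center and Affiliated Projects Results Showcase" on Friday, November 26, 2021. Affiliated research teams in AI core technologies and in healthcare presented forward-looking research results and applications in two sessions, through technical talks and poster presentations.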

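In the AI core technologies session, computer vision and multimedia research teams presented work that combines many techniques and has already been deployed in video surveillance and medical imaging, mobile chips and manufacturing, and banking and public-opinion analysis. For example, Prof. Chu-Song Chen's team at NTU applied its continual lifelong learning (CLL) model to industrial defect detection. All defect recognition is handled in a single model, and new detection targets can be added without reducing the accuracy on existing ones.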

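Chu-Song Chen's team also developed an audio-visual speech enhancement technique. It combines grayscale conversion, resolution reduction, and autoencoding to bring the cost of video processing down to roughly that of audio processing. The processed frames cannot be restored to the original images, yet the enhancement still performs well, so shared image data stays both useful and private.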
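The first two preprocessing steps named above can be sketched in pure Python on a nested-list image. The sketch assumes ITU-R BT.601 luma weights for grayscale conversion and non-overlapping average pooling for resolution reduction. The team's exact conversions are not stated. The autoencoder stage is also not shown, and the function names are hypothetical. Each step throws away information: color in the first, fine spatial detail in the second.

```python
def to_grayscale(rgb):
    """rgb: H x W list of (r, g, b) tuples -> H x W list of luma values.

    Uses ITU-R BT.601 weights, an assumption of this sketch.
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def downsample(gray, factor):
    """Average-pool non-overlapping factor x factor blocks (ragged edges dropped)."""
    h, w = len(gray) // factor, len(gray[0]) // factor
    return [
        [
            sum(
                gray[i * factor + di][j * factor + dj]
                for di in range(factor)
                for dj in range(factor)
            )
            / (factor * factor)
            for j in range(w)
        ]
        for i in range(h)
    ]


# A 4 x 4 RGB frame (48 values) becomes a 2 x 2 grayscale frame (4 values).
frame = [[(200, 120, 40)] * 4 for _ in range(4)]
small = downsample(to_grayscale(frame), 2)
print(len(small), len(small[0]))  # 2 2
```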

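Prof. Chia-Wen Lin's team at National Tsing Hua University used deep learning to build an EDA tool for semiconductor manufacturing. The tool predicts, early in the flow, the circuit distortion produced by the lithography process and the mask corrections it requires. It can be applied to layout evaluation, IC defect and hotspot prediction, and mask optimization. This is the world's first technique to use computer vision to accurately predict the distortion that lithography introduces into wafer patterns. It is well ahead of current EDA design tools and could bring about a paradigm shift in semiconductor-process EDA.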

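Research teams in AI chips, hardware design, and communications have also developed several practical technologies. Prof. Shih-Hau Fang's team at Yuan Ze University developed a millimeter-wave radar motion-sensing technology for remote home care and care facilities where cameras raise privacy concerns. It can detect falls or monitor the vital signs of bedridden people, reducing the burden on caregivers.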

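Offline voice-controlled devices currently struggle to achieve large-vocabulary keyword recognition. Shih-Hau Fang's team developed a personalized speech enhancement technique that removes noise from speech signals offline and improves keyword recognition. It can be used in voice-controlled home appliances and home-care devices.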

Above: Shih-Hau Fang's team received the 2019 Future Tech Breakthrough Award for its "Personalized Speech Enhancement System."

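Running compute-intensive AI on edge devices is another challenge, and general-purpose graphics processing units (GPGPUs), which support highly parallel processing, are one promising direction. Since 2013, Prof. Chung-Ho Chen's team at National Cheng Kung University has been developing the CASLab GPU. Its goal is to build Taiwan's first domestically designed SIMT compute GPU.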

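Above: Chung-Ho Chen's team received the 2020 Macronix Golden Silicon Award for its "General-Purpose GPU Compliant with the OpenCL/TensorFlow API Specifications."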

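An optimized compilation flow matches the software stack more closely to the hardware, greatly improving overall performance, and the team provides an open-source development and runtime environment. Both the OpenCL runtime and the compiler are written in C and can run on common CPU platforms such as Arm and RISC-V. The technology has already been transferred to several companies, where it is quickly adding AI capabilities to MCUs.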

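In this era of connected smart services, people are used to online services, and in recent years industry has widely deployed AI customer-service agents on the front line. Accurate responses require a broad knowledge base. In the natural language and affective computing category, Prof. Wei-Yun Ma's team at Academia Sinica developed a distinctive form of knowledge representation. It takes the commonsense knowledge in the Extended HowNet (E-HowNet) and attaches additional knowledge from Wikipedia text, expanding the vocabulary to build a Chinese knowledge graph with a million-word vocabulary.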

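By combining and decomposing a limited set of concepts, the representation can express an unlimited range of meanings, which makes logical inference easier for machines. This strengthens the Chinese semantic understanding of AI applications such as chatbots. It can also support semantic analysis tools and Chinese or Mandarin language teaching. Several companies have already approached the team and adopted the technology.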

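Machine learning, deep learning, and security and privacy are also hot topics in AI research. Many machine learning services are now used through the cloud, but data theft is frequent, and some attackers have even used deep learning to reconstruct original images illegally. Prof. Pei-Yuan Wu's team at NTU proposed a generative adversarial compressive privacy network. It processes images and videos with nonlinear transformations that preserve the features needed for action recognition while hiding the identities of the people in the videos.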

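The team has also applied secure multi-party computation to deep learning image classification. This protects the neural network so that outsiders cannot learn which data were used to train it. The technique suits work with confidential data such as medical images, face recognition, and iris recognition. With these technologies, providers retain enough information to offer cloud services, while users' privacy stays protected.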
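Secure multi-party computation covers many protocols, and the team's specific scheme is not described here. The generic sketch below shows its basic building block, additive secret sharing. A value is split into random shares so that no single party learns it, yet the parties can still add shared values by working only on their own shares. Protecting a full neural network also needs protocols for multiplication (for example Beaver triples), which this sketch omits. `PRIME` and the function names are assumptions.

```python
import secrets

PRIME = 2**61 - 1  # field modulus: a Mersenne prime chosen arbitrarily for this sketch


def share(secret, n_parties=3):
    """Split an integer in [0, PRIME) into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]


def reconstruct(shares):
    """Combine all shares; any subset smaller than n reveals nothing about the secret."""
    return sum(shares) % PRIME


def add_shared(a_shares, b_shares):
    """Each party adds its own two shares locally; no party ever sees a or b."""
    return [(x + y) % PRIME for x, y in zip(a_shares, b_shares)]


a, b = share(42), share(100)
print(reconstruct(add_shared(a, b)))  # 142
```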

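In the healthcare session, the teams showed several medical imaging results that are already in clinical use. Prof. Ruey-Feng Chang's team at NTU used deep learning to build a fully automated breast ultrasound cancer detection and diagnosis system. With its single-pass examination design, it can review one automated breast ultrasound scan (300 images) for breast cancer in under 1 second, far faster than traditional reading. It can precisely locate breast tumors and display the affected region, with a detection accuracy of 95%. Its breast cancer diagnostic accuracy reaches 89.2%, giving it high clinical value.

Prof. Sheng-Lung Huang's team at NTU combines deep convolutional neural networks with three-dimensional cell-resolution tomographic images. The system analyzes the morphology and statistics of cell nuclei in living tissue in real time and annotates the dermal-epidermal junction. It can also convert OCT images into simulated stained-section images to assist pathological diagnosis. The team transferred this technology to develop ApolloVue S100, a high-resolution in vivo optical imaging system designed in Taiwan. With very high 3D resolution, it shows the complete epidermis and upper dermis of human skin in real time. Intelligent image guidance lets users switch quickly between cross-sectional and longitudinal imaging modes. The system has received US FDA Class II and Taiwan TFDA Class II medical device certification. The related computer-aided detection and diagnosis systems provide real-time diagnostic reference, reducing human error and helping physicians make timely and more precise diagnoses.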

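The NTU AI Center and its 31 affiliated teams have carried out MOST AI innovation projects for four years, with impressive results in academic research, international collaboration, and industrial application. The center works to connect academic talent and technology with real industrial applications and to foster cross-domain, cross-institutional, and international collaboration. If you are considering adopting AI technology, undertaking digital transformation, developing AI applications, licensing academic AI technology, or collaborating with academic research teams, please contact the NTU AI Center. Visit the NTU AI Center website and the healthcare sub-center website to learn more about the teams' research results.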

👉 For details, see this link

Reprinted from: https://www.cw.com.tw/article/5119119

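"In machine learning, getting the data is the first hurdle. To build an emotion-computing model for Mandarin conversation in Taiwan, five years ago our team first enlisted drama-department actors to act out various conversational situations, and then combined those recordings with behavioral science theory to help build the model," said Chi-Chun Lee, associate professor in the Department of Electrical Engineering at National Tsing Hua University.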

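The team turned to actors because, although there is plenty of affective computing research abroad, privacy rules limit its usefulness. Even when research institutions are willing to share their models, those models have limited value without access to the underlying raw data. Collecting a Chinese-language database from real-world sources from scratch would also take a very long time, which is why the team came up with this approach.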

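Even so, Prof. Lee's team spent four years accumulating more than 100 hours of conversational data and incorporating behavioral science theory before it could build an effective Chinese emotion recognition model.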

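"Only when podcasts became popular in the past two years did the team see a way past this hurdle: gathering large amounts of more varied conversational data in a shorter time," Lee noted. In the past, emotion-computing models were typically built on only 10,000 to 20,000 utterances, at most a dozen or so hours of data. The latest research abroad that uses podcasts as a data source targets at least 400 hours.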

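Lee said the team is now collaborating with the research group behind that podcast-based work, using Chinese-language podcast programs as source material. The team combines algorithms for automatic speech recognition, semantic analysis, multimodal fusion, and personalized modeling with representation learning of individual spatial behavior, and uses deep networks to build an emotion recognition module.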

Chi-Chun Lee, Associate Professor, Department of Electrical Engineering, National Tsing Hua University

Beyond affective computing, building a Chinese-language "super AI customer-service agent" also depends on the core technology of representation learning.

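"In AI customer service, whether the input is text, speech, images, or video, the data must be converted into vectors of real numbers that a machine can learn from. Representation learning is the technique by which a model learns how to extract these features at the same time as it uses them," said Shan-Hung Wu, professor in the Department of Computer Science at National Tsing Hua University.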

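Because representation learning can "learn how to learn," it removes the need for specialists to spend large amounts of time extracting features by hand and "inputting" data. On the "output" side, it also helps generate original text, images, speech, and video, creating a better user experience in online shopping environments.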

Shan-Hung Wu, Professor, Department of Computer Science, National Tsing Hua University

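However, the biggest obstacle to building a super AI customer-service agent for the Chinese-speaking world is still the Tower of Babel of language. The dominant languages of machine learning use alphabetic writing systems, and they have built high walls in the form of code, so it can seem hard for Chinese-language research to catch up.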

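Hsin-Hsi Chen, professor in the Department of Computer Science and Information Engineering at NTU, has studied natural language processing (NLP, also called computational linguistics, CL) for more than thirty years, and he does not see this as a difficult problem.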

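"Whether it is an English word spelled with letters or a Chinese character, it has to be converted into a meaningful form, a so-called representation, before it means anything to a computer," Chen explained. The way computers represent language has evolved from early symbolic representations, through distributional representations, to the distributed representations widely used in deep learning today. In every case, real-world things must first be turned into representations and then computed on in different ways.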

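Moreover, from words to sentences, in Chinese or English, as long as the people, events, times, places, and things can be identified from sentence patterns made of nouns, verbs, adjectives, and adverbs, they can be turned into the data that natural language processing needs.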

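"In particular, the distributed representations in deep learning use low-dimensional, dense vectors and replace the counting used by distributional representations with prediction. This has greatly improved performance on machine learning tasks and given natural language processing a major leap forward," Chen said.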

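Hsin-Hsi Chen, Distinguished Professor, Department of Computer Science and Information Engineering, National Taiwan University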
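To make Chen's contrast concrete, the sketch below shows the counting approach behind distributional representations. Each word is represented by counts of its neighboring words, and similarity is the cosine between count vectors. The toy corpus, the window size, and the function names are assumptions. Distributed (dense) representations such as word2vec instead learn a short vector per word by training a model to predict context words, which is not shown.

```python
from collections import Counter
from math import sqrt


def cooccurrence_vectors(sentences, window=1):
    """Count-based vectors: for each word, count neighbors within `window` positions."""
    vectors = {}
    for sent in sentences:
        words = sent.split()
        for i, w in enumerate(words):
            ctx = vectors.setdefault(w, Counter())
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    ctx[words[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


corpus = ["the cat drinks milk", "the dog drinks water", "the cat chases the dog"]
vecs = cooccurrence_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 3))  # 0.913
```

"cat" and "dog" come out similar because they occur in similar contexts. The vectors, however, have one dimension per vocabulary word, which is the sparsity that dense distributed representations avoid.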

Advances in deep learning have also opened up new possibilities for the AI customer service of the future.

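"Today, besides online translation and chatbots, natural language processing is already used in public-opinion analysis, medical record mining, fintech, healthcare, legal consultation, cooking instruction, and other fields. With deep learning, its future potential is very promising," Chen said.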

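As research teams use deep learning to collect and compute on data, academia has also begun to consider, early in a technology's research, the problems and controversies it may cause once developed, and to address and guard against them.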

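"People enjoy the convenience of Google and Facebook, but the flood of targeted ads driven by big-data algorithms has also made the public feel increasingly watched, raising issues such as control over one's own information," Shan-Hung Wu pointed out. As consumers place more value on information security, software that stops platforms from collecting personal data will emerge. For example, simply installing a certain app on your phone could prevent platforms from collecting your data.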

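"Only when information security and autonomy are guaranteed can people immerse themselves with peace of mind in virtual worlds built from multimedia, and can artists create without fear of infringement," said Chi-Chun Lee.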

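Natural language processing converts human language into a form computers can use, and deep learning builds on it to produce a wide range of AI customer-service applications. The super AI customer-service agent of the future will be one of the keys to unlocking the metaverse. The information security issues raised by these technologies will be the most important psychological safety valve as people move between the physical and virtual worlds.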


For the full article, see "The Key to Unlocking the Metaverse: Super AI Customer Service" (啟動元宇宙的鑰匙:超級AI客服): https://www.cw.com.tw/article/5119119


Titouan Parcollet is an associate professor in computer science at the Laboratoire Informatique d’Avignon (LIA), Avignon University (FR), and a visiting scholar at the Cambridge Machine Learning Systems Lab, University of Cambridge (UK). Previously, he was a senior research associate at the University of Oxford (UK) within the Oxford Machine Learning Systems group. He received his PhD in computer science from the University of Avignon (France), in partnership with Orkis, focusing on quaternion neural networks, automatic speech recognition, and representation learning. His current work involves efficient speech recognition, federated learning, and self-supervised learning. He is also collaborating with the Université de Montréal (Mila, QC, Canada) on the SpeechBrain project.

Mirco Ravanelli is currently a postdoctoral researcher at Mila (Université de Montréal) working under the supervision of Prof. Yoshua Bengio. His main research interests are deep learning, speech recognition, far-field speech recognition, cooperative learning, and self-supervised learning. He is the author or co-author of more than 50 papers on these topics. He received his PhD (cum laude) from the University of Trento in December 2017. Mirco is an active member of the speech and machine learning communities. He is the founder and leader of the SpeechBrain project.

Shinji Watanabe is an Associate Professor at Carnegie Mellon University, Pittsburgh, PA. He received his B.S., M.S., and Ph.D. (Dr. Eng.) degrees from Waseda University, Tokyo, Japan. He was a research scientist at NTT Communication Science Laboratories, Kyoto, Japan, from 2001 to 2011, a visiting scholar at the Georgia Institute of Technology, Atlanta, GA, in 2009, and a senior principal research scientist at Mitsubishi Electric Research Laboratories (MERL), Cambridge, MA, USA, from 2012 to 2017. Before moving to Carnegie Mellon University, he was an associate research professor at Johns Hopkins University, Baltimore, MD, USA, from 2017 to 2020. His research interests include automatic speech recognition, speech enhancement, spoken language understanding, and machine learning for speech and language processing. He has published more than 200 papers in peer-reviewed journals and conferences and has received several awards, including the best paper award at IEEE ASRU 2019. He served as an Associate Editor of the IEEE Transactions on Audio, Speech, and Language Processing. He has been a member of several technical committees, including the APSIPA Speech, Language, and Audio Technical Committee (SLA), the IEEE Signal Processing Society Speech and Language Technical Committee (SLTC), and the Machine Learning for Signal Processing Technical Committee (MLSP).

Karteek Alahari is a senior researcher (known as chargé de recherche in France, which is equivalent to a tenured associate professor) at Inria. He is based in the Thoth research team at the Inria Grenoble – Rhône-Alpes center. He was previously a postdoctoral fellow in the Inria WILLOW team at the Department of Computer Science in ENS (École Normale Supérieure), after completing his PhD in 2010 in the UK. His current research focuses on addressing the visual understanding problem in the context of large-scale datasets. In particular, he works on learning robust and effective visual representations, when only partially-supervised data is available. This includes frameworks such as incremental learning, weakly-supervised learning, adversarial training, etc. Dr. Alahari’s research has been funded by a Google research award, the French national research agency, and other industrial grants, including Facebook, NaverLabs Europe, Valeo.

Sijia Liu is currently an Assistant Professor at the Computer Science & Engineering Department of Michigan State University. He received the Ph.D. degree (with All-University Doctoral Prize) in Electrical and Computer Engineering from Syracuse University, NY, USA, in 2016. He was a Postdoctoral Research Fellow at the University of Michigan, Ann Arbor, in 2016-2017, and a Research Staff Member at the MIT-IBM Watson AI Lab in 2018-2020. His research spans the areas of machine learning, optimization, computer vision, signal processing and computational biology, with a focus on developing learning algorithms and theory for scalable and trustworthy artificial intelligence (AI). He received the Best Student Paper Award at the 42nd IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). His work has been published at top-tier AI conferences such as NeurIPS, ICML, ICLR, CVPR, ICCV, ECCV, AISTATS, and AAAI.

Soheil Feizi is an assistant professor in the Computer Science Department at the University of Maryland, College Park. Before joining UMD, he was a postdoctoral research scholar at Stanford University. He received his Ph.D. from the Massachusetts Institute of Technology (MIT). He received the NSF CAREER award in 2020 and the Simons-Berkeley Research Fellowship on deep learning foundations in 2019. He is the 2020 recipient of the AWS Machine Learning Research award and a 2019 recipient of both the IBM faculty award and the Qualcomm faculty award. He received teaching awards in Fall 2018 and Spring 2019 from the CS department at UMD. His work received the best paper award of the IEEE Transactions on Network Science and Engineering for the three-year period 2017-2019. At MIT, he received the Ernst Guillemin award for his M.Sc. thesis, as well as the Jacobs Presidential Fellowship and the EECS Great Educators Fellowship.

I am a Research Staff Member at IBM T. J. Watson Research Center.

Prior to this, I was a Postdoctoral Researcher at the Center for Theoretical Physics, MIT.

I received my Ph.D. in 2018 from Centrum Wiskunde & Informatica and QuSoft, Amsterdam, Netherlands, supervised by Ronald de Wolf. Before that, I completed my M.Math in Mathematics at the University of Waterloo and the Institute for Quantum Computing, Canada, in 2014, supervised by Michele Mosca.

Shang-Wen (Daniel) Li is an Engineering and Science Manager at Facebook AI. His research focuses on natural language and speech understanding, conversational AI, meta learning, and AutoML. He led a team at AWS AI building conversational AI technology for call center analytics and chatbot authoring. He also worked on the conversational assistants Amazon Alexa and Apple Siri. He earned his PhD from MIT CSAIL with a focus on natural language understanding and its application to online education. He co-organized the workshop "Self-Supervised Learning for Speech and Audio Processing" at NeurIPS (2020) and the workshop "Meta Learning and Its Applications to Natural Language Processing" at ACL (2021).

Thang Vu received his Diploma (2009) and PhD (2014) degrees in computer science from Karlsruhe Institute of Technology, Germany. From 2014 to 2015, he worked at Nuance Communications as a senior research scientist and at Ludwig-Maximilian University Munich as an acting professor in computational linguistics. In 2015, he was appointed assistant professor at University of Stuttgart, Germany. Since 2018, he has been a full professor at the Institute for Natural Language Processing in Stuttgart. His main research interests are natural language processing (esp. speech, natural language understanding and dialog systems) and machine learning (esp. deep learning) for low-resource settings.

Song Han is an assistant professor at MIT’s EECS. He received his PhD degree from Stanford University. His research focuses on efficient deep learning computing. He proposed the “deep compression” technique, which can reduce neural network size by an order of magnitude without losing accuracy, and the hardware implementation “efficient inference engine,” which first exploited pruning and weight sparsity in deep learning accelerators. His team’s work on hardware-aware neural architecture search that brings deep learning to IoT devices was highlighted by MIT News, Wired, Qualcomm News, VentureBeat, and IEEE Spectrum, was integrated in PyTorch and AutoGluon, and received many low-power computer vision contest awards at flagship AI conferences (CVPR’19, ICCV’19, and NeurIPS’19). Song received Best Paper awards at ICLR’16 and FPGA’17, an Amazon Machine Learning Research Award, a SONY Faculty Award, a Facebook Faculty Award, and an NVIDIA Academic Partnership Award. Song was named one of the “35 Innovators Under 35” by MIT Technology Review for his contribution on the “deep compression” technique that “lets powerful artificial intelligence (AI) programs run more efficiently on lowpower mobile devices.” Song received the NSF CAREER Award for “efficient algorithms and hardware for accelerated machine learning” and the IEEE “AI’s 10 to Watch: The Future of AI” award.

Hung-yi Lee received the M.S. and Ph.D. degrees from National Taiwan University (NTU), Taipei, Taiwan, in 2010 and 2012, respectively. From September 2012 to August 2013, he was a postdoctoral fellow in Research Center for Information Technology Innovation, Academia Sinica. From September 2013 to July 2014, he was a visiting scientist at the Spoken Language Systems Group of MIT Computer Science and Artificial Intelligence Laboratory (CSAIL).

John Shawe-Taylor is professor of Computational Statistics and Machine Learning at University College London. He has helped to drive a fundamental rebirth in the field of machine learning, with applications in novel domains including computer vision, document classification, and applications in biology and medicine focussed on brain scan, immunity and proteome analysis. He has published over 250 papers and two books that have together attracted over 80000 citations.

He has also been instrumental in assembling a series of influential European Networks of Excellence. The scientific coordination of these projects has influenced a generation of researchers and promoted the widespread uptake of machine learning in both science and industry that we are currently witnessing.

He was appointed UNESCO Chair of Artificial Intelligence in November 2018 and is the leading trustee of the UK Charity, Knowledge 4 All Foundation, promoting open education and helping to establish a network of AI researchers and practitioners in sub-Saharan Africa. He is the Director of the International Research Center on Artificial Intelligence established under the Auspices of UNESCO in Ljubljana, Slovenia.

Been Kim is a staff research scientist at Google Brain. Her research focuses on improving interpretability in machine learning, either by building interpretability methods for already-trained models or by building inherently interpretable models. She gave a talk at the G20 meeting in Argentina in 2019. Her work TCAV received the UNESCO Netexplo award and was featured at Google I/O ’19 and in Brian Christian’s book “The Alignment Problem”. Been has given a keynote at ECML 2020 and tutorials on interpretability at ICML, the University of Toronto, CVPR, and Lawrence Berkeley National Laboratory. She was a workshop co-chair for ICLR 2019 and has been an area chair or senior area chair at conferences including NeurIPS, ICML, ICLR, and AISTATS. She received her PhD from MIT.

Philipp is an Assistant Professor in the Department of Computer Science at the University of Texas at Austin. He received his PhD in 2014 from the CS Department at Stanford University and then spent two wonderful years as a PostDoc at UC Berkeley. His research interests lie in Computer Vision, Machine learning and Computer Graphics. He is particularly interested in deep learning, image, video, and scene understanding.

Cho-Jui Hsieh is an assistant professor in UCLA Computer Science Department. He obtained his Ph.D. from the University of Texas at Austin in 2015 (advisor: Inderjit S. Dhillon). His work mainly focuses on improving the efficiency and robustness of machine learning systems and he has contributed to several widely used machine learning packages. He is the recipient of NSF Career Award, Samsung AI Researcher of the Year, and Google Research Scholar Award. His work has been recognized by several best/outstanding paper awards in ICLR, KDD, ICDM, ICPP and SC.

Ming-Wei Chang is currently a Research Scientist at Google Research, working on machine learning and natural language processing problems. He is interested in developing fundamental techniques that can bring new insights to the field and enable new applications. He has published many papers on representation learning, question answering, entity linking and semantic parsing. Among them, BERT, a framework for pre-training deep bidirectional representations from unlabeled text, probably received the most attention. BERT achieved state-of-the-art results for 11 NLP tasks at the time of the publication. Recently he helped co-write the Deep learning for NLP chapter in the fourth edition of the Artificial Intelligence: A Modern Approach. His research has won many awards including 2019 NAACL best paper, 2015 ACL outstanding paper and 2019 ACL best paper candidate.

I received my Ph.D. in 11/2005 working at Ecole des Mines de Paris. Before that, I graduated from ENSAE with a master's degree from ENS Cachan. I worked as a postdoctoral researcher at the Institute of Statistical Mathematics, Tokyo, between 11/2005 and 03/2007. Between 04/2007 and 09/2008 I worked in the financial industry. After working as a lecturer in the ORFE department of Princeton University between 02/2009 and 08/2010, I was an associate professor at the Graduate School of Informatics of Kyoto University between 09/2010 and 09/2016 (tenured in 11/2013). I joined ENSAE in 09/2016. Since 10/2018, when I joined the Paris office of Google Brain as a research scientist, I have worked at ENSAE part-time.

Arthur Gretton is a Professor with the Gatsby Computational Neuroscience Unit, and director of the Centre for Computational Statistics and Machine Learning (CSML) at UCL. He received degrees in Physics and Systems Engineering from the Australian National University, and a PhD with Microsoft Research and the Signal Processing and Communications Laboratory at the University of Cambridge. He previously worked at the MPI for Biological Cybernetics, and at the Machine Learning Department, Carnegie Mellon University.

Arthur’s recent research interests in machine learning include the design and training of generative models, both implicit (e.g. GANs) and explicit (exponential family and energy-based models), nonparametric hypothesis testing, survival analysis, causality, and kernel methods.

He was an associate editor of the IEEE Transactions on Pattern Analysis and Machine Intelligence from 2009 to 2013, and has been an Action Editor for JMLR since April 2013. He was an Area Chair for NeurIPS in 2008 and 2009, a Senior Area Chair for NeurIPS in 2018 and 2021, an Area Chair for ICML in 2011 and 2012, and a member of the COLT Program Committee in 2013, and he has been a member of the Royal Statistical Society Research Section Committee since January 2020. Arthur was program chair for AISTATS in 2016 (with Christian Robert), tutorials chair for ICML 2018 (with Ruslan Salakhutdinov), workshops chair for ICML 2019 (with Honglak Lee), program chair for the Dali workshop in 2019 (with Krikamol Muandet and Shakir Mohamed), and co-organizer of the Machine Learning Summer School 2019 in London (with Marc Deisenroth).

Prof. Hsuan-Tien Lin received a B.S. in Computer Science and Information Engineering from National Taiwan University in 2001, and an M.S. and a Ph.D. in Computer Science from the California Institute of Technology in 2005 and 2008, respectively. He joined the Department of Computer Science and Information Engineering at National Taiwan University as an assistant professor in 2008, was promoted to associate professor in 2012, and has been a professor since August 2017. Between 2016 and 2019, he was the Chief Data Scientist of Appier, a startup that specializes in making AI easier to use in domains such as digital marketing and business intelligence. He currently continues to work with Appier as its Chief Data Science Consultant.

From the university, Prof. Lin received the Distinguished Teaching Award in 2011, the Outstanding Mentoring Award in 2013, and the Outstanding Teaching Award in 2016, 2017 and 2018. He co-authored the introductory machine learning textbook Learning from Data and offered two popular Mandarin-teaching MOOCs Machine Learning Foundations and Machine Learning Techniques based on the textbook. His research interests include mathematical foundations of machine learning, studies on new learning problems, and improvements on learning algorithms. He received the 2012 K.-T. Li Young Researcher Award from the ACM Taipei Chapter, the 2013 D.-Y. Wu Memorial Award from National Science Council of Taiwan, and the 2017 Creative Young Scholar Award from Foundation for the Advancement of Outstanding Scholarship in Taiwan. He co-led the teams that won the third place of KDDCup 2009 slow track, the champion of KDDCup 2010, the double-champion of the two tracks in KDDCup 2011, the champion of track 2 in KDDCup 2012, and the double-champion of the two tracks in KDDCup 2013. He served as the Secretary General of Taiwanese Association for Artificial Intelligence between 2013 and 2014.

Lecture Title

Developing a World-Class AI Facial Recognition Solution – CyberLink FaceMe®

Lecture Abstract

CyberLink’s FaceMe® is a world-leading AI facial recognition solution. In this session, Davie Lee (R&D Vice President of CyberLink) will share the fundamentals of developing facial recognition solutions, such as the inference pipeline, as well as key industrial use cases and trends in AI facial recognition.

Lecture Title

Transform the Beauty Industry through AI + AR: Perfect Corp’s Innovative Vision into the Digital Era

Lecture Abstract

Perfect Corp. is the world’s leading beauty tech solutions provider transforming the industry by marrying the highest level of augmented reality (AR) and artificial intelligence (AI) technology for a re-imagined consumer shopping experience.

Johnny Tseng (CTO of Perfect Corp.) will share Perfect Corp.’s AI/AR beauty tech solutions and its roadmap for advanced AI technology.

Dr. Shou-De Lin has been Appier’s Chief Machine Learning (ML) Scientist since February 2020 and has 20+ years of experience in AI, machine learning, data mining, and natural language processing. Before joining Appier, he was a full-time professor in the National Taiwan University (NTU) Department of Computer Science and Information Engineering. Dr. Lin has received several prestigious research awards and brings both academic and industry expertise to Appier. He has advised more than 50 global companies on the research and application of AI, winning awards from Microsoft, Google, and IBM for his work. He led or co-led the NTU teams that won 7 ACM KDD Cup championships. He has over 100 publications in top-tier journals and conferences and has won various dissertation awards. At Appier, Dr. Lin led the AiDeal team to win Best Overall AI-based Analytics Solution in the 2020 Artificial Intelligence Breakthrough Awards. Dr. Lin holds a BS-EE degree from NTU and an MS-EECS degree from the University of Michigan. He also holds an MS degree in Computational Linguistics and a Ph.D. in Computer Science, both from the University of Southern California.

Lecture Title

Machine Learning as a Service: Challenges and Opportunities

Lecture Abstract

Businesses today are dealing with huge amounts of data and the volume is growing faster than ever. At the same time, the competitive landscape is changing rapidly and it’s critical for commercial organizations to make decisions fast. Business success comes from making quick, accurate decisions using the best possible information.

Machine learning (ML) is a vital technology for companies seeking a competitive advantage because it can process large volumes of data quickly, helping businesses, for example, make more effective recommendations to customers, hone manufacturing processes, or anticipate market changes.

Machine Learning as a Service (MLaaS), in a business context, refers to companies designing and implementing ML models that provide a continuous and consistent service to customers. This is critical in areas where customer needs and behaviours change rapidly. For example, since 2020 people have changed how they shop, work, and socialize as a direct result of the COVID-19 pandemic, and businesses have had to change how they serve their customers to meet those needs.

This means that the technology they are using to gather and process data also needs to be flexible and adaptable to new data inputs, allowing businesses to move fast and make the best decisions.

One current challenge in moving from ML models to MLaaS lies in how we currently build ML models and how we teach future ML talent to build them. Most research and development of ML models focuses on building individual models that use a set of training data (with pre-assigned features and labels) to deliver the best performance in predicting the labels of another set of data (normally called testing data). However, for real-world businesses trying to meet the ever-evolving needs of real-life customers, the boundary between training and testing data becomes less clear: today’s testing or prediction data can be exploited as training data to create a better model in the future.

Consequently, the data used to train a model will inevitably be imperfect, for several reasons. Besides the fact that real-world data sources can be incomplete or unstructured (such as open-ended answers in customer questionnaires), they can come from a biased collection process. For instance, the data used to train a recommendation model are normally collected from feedback on another recommender system that is currently serving online, so the collected data are biased by that online serving model.
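To illustrate how a serving model biases logged feedback, here is a minimal Python sketch built entirely on simulated, hypothetical numbers: an assumed logging (currently serving) policy shows item "A" 90% of the time, and clicks are observed only for the items it chose to show. The naive click rate in the logs therefore reflects the logging policy, not a candidate policy we might want to deploy. The sketch applies inverse propensity scoring (IPS), a standard off-policy correction that the abstract itself does not mention, which reweights each logged click by the ratio of the target and logging probabilities. All names and click rates are assumptions for illustration.

```python
import random

# Hypothetical true click-through rates (unknown to a business in practice).
TRUE_CTR = {"A": 0.02, "B": 0.10}

# Hypothetical logging policy: the serving model shows "A" 90% of the time.
LOGGING_POLICY = {"A": 0.9, "B": 0.1}

# Hypothetical candidate policy we would like to evaluate offline.
TARGET_POLICY = {"A": 0.1, "B": 0.9}


def collect_logs(n, seed=0):
    """Simulate feedback logged while the serving model chooses what to show."""
    rng = random.Random(seed)
    items = list(LOGGING_POLICY)
    weights = [LOGGING_POLICY[i] for i in items]
    logs = []
    for _ in range(n):
        item = rng.choices(items, weights=weights)[0]
        clicked = 1 if rng.random() < TRUE_CTR[item] else 0
        # Record the shown item, the probability it was shown with, and the click.
        logs.append((item, LOGGING_POLICY[item], clicked))
    return logs


def naive_estimate(logs):
    """Average click rate in the logs; it reflects the logging policy."""
    return sum(c for _, _, c in logs) / len(logs)


def ips_estimate(logs, target):
    """Inverse propensity scoring estimate of the target policy's click rate."""
    return sum(target[item] / p * c for item, p, c in logs) / len(logs)


def true_value(policy):
    """Expected click rate of a policy under the simulated TRUE_CTR."""
    return sum(policy[i] * TRUE_CTR[i] for i in policy)


if __name__ == "__main__":
    logs = collect_logs(200_000)
    print(f"naive log CTR:         {naive_estimate(logs):.4f}")
    print(f"IPS estimate (target): {ips_estimate(logs, TARGET_POLICY):.4f}")
    print(f"true target CTR:       {true_value(TARGET_POLICY):.4f}")
```

Under these assumed numbers, the naive log click rate should land near the logging policy's value (about 0.028), while the IPS estimate should land near the target policy's value (about 0.092). IPS removes this bias at the cost of higher variance, which grows when the logging policy rarely shows the items the target policy favors.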

Additionally, the outcome we really care about is often the hardest to evaluate. Let’s take digital marketing for ecommerce as an example. The most

As Managing Director, Jason Ma oversees Google Taiwan’s site growth, business management, and development, and leads multiple R&D projects across the board. Before taking this leadership role at Google Taiwan, Jason was a Platform Technology and Cloud Computing expert in the Platform & Ecosystem business group at Google in Mountain View, CA. In his 10 years with Google, Jason has successfully led strategic partnerships with global hardware and software manufacturers and major chip providers to drive various innovations in cloud technology. These efforts have not only contributed to a substantial increase in Chromebook’s share of the global education, consumer, and enterprise markets, but have also attracted global talent to join Google and its partners in furthering the development of hardware and software technology solutions and services.

Prior to joining Google, Jason served in the Office group at Microsoft in Redmond, WA. He represented the company in a project involving Merck, Dell, Boeing, and the United States Department of Defense to deliver solutions in unified communications and integrated voice technology. In 2007, Jason was appointed Director of the Microsoft Technology Center in Taiwan. During this time, he led the Microsoft Taiwan technology team and worked with Intel and HP to establish a Solution Center in Taiwan to promote Microsoft public cloud, data center, and private cloud technologies, connecting Taiwan’s cloud computing industry with the global market and supply chain.

Before joining Microsoft, Jason was Vice President and Chief Technology Officer at Soma.com, where he led the team in designing and launching e-commerce services and partnered with Merck and WebMD on health consultation services and over-the-counter and prescription drugs and services. Soma.com was later acquired by CVS, the second-largest pharmacy chain in the United States, forming CVS.com, where Jason served as Vice President and Chief Technology Officer and provided solutions for digital integration.

Jason graduated from the Department of Electrical Engineering at National Cheng Kung University, after which he moved to the United States for graduate studies. In 1993, he obtained a Ph.D. in Electrical Engineering from the University of Washington, focusing on the integration and innovation of power systems and AI expert systems. In 1997, he joined National Sun Yat-sen University as an Associate Professor of Electrical Engineering. To date, Jason has published 22 research papers and co-authored 2 books. In recognition of his outstanding performance, he was nominated and listed in Who’s Who in the World in 1998.

Kai-Wei Chang is an assistant professor in the Department of Computer Science at the University of California, Los Angeles (UCLA). His research interests include designing robust machine learning methods for large and complex data and building fair, reliable, and accountable language processing technologies for social good applications. Dr. Chang has published broadly in natural language processing, machine learning, and artificial intelligence. His research has been covered by news media such as Wired, NPR, and MIT Tech Review. His awards include the Sloan Research Fellowship (2021), the EMNLP Best Long Paper Award (2017), the KDD Best Paper Award (2010), and the Okawa Research Grant Award (2018). Dr. Chang obtained his Ph.D. from the University of Illinois at Urbana-Champaign in 2015 and was a post-doctoral researcher at Microsoft Research in 2016.

Additional information is available at http://kwchang.net

Short version:

Dr. Pin-Yu Chen is a research staff member at IBM Thomas J. Watson Research Center, Yorktown Heights, NY, USA. He is also the chief scientist of RPI-IBM AI Research Collaboration and PI of ongoing MIT-IBM Watson AI Lab projects. Dr. Chen received his Ph.D. degree in electrical engineering and computer science from the University of Michigan, Ann Arbor, USA, in 2016. Dr. Chen’s recent research focuses on adversarial machine learning and robustness of neural networks. His long-term research vision is building trustworthy machine learning systems. At IBM Research, he received the honor of IBM Master Inventor and several research accomplishment awards. His research works contribute to IBM open-source libraries including Adversarial Robustness Toolbox (ART 360) and AI Explainability 360 (AIX 360). He has published more than 40 papers related to trustworthy machine learning at major AI and machine learning conferences, given tutorials at IJCAI ’21, CVPR (’20, ’21), ECCV ’20, ICASSP ’20, KDD ’19, and Big Data ’18, and organized several workshops on adversarial machine learning. He received a NeurIPS 2017 Best Reviewer Award and was also the recipient of the IEEE GLOBECOM 2010 GOLD Best Paper Award.

Full version:

Dr. Pin-Yu Chen is currently a research staff member at IBM Thomas J. Watson Research Center, Yorktown Heights, NY, USA. He is also the chief scientist of RPI-IBM AI Research Collaboration and PI of ongoing MIT-IBM Watson AI Lab projects. Dr. Chen received his Ph.D. degree in electrical engineering and computer science and M.A. degree in Statistics from the University of Michigan, Ann Arbor, USA, in 2016. He received his M.S. degree in communication engineering from National Taiwan University, Taiwan, in 2011 and B.S. degree in electrical engineering and computer science (undergraduate honors program) from National Chiao Tung University, Taiwan, in 2009.

Dr. Chen’s recent research focuses on adversarial machine learning and robustness of neural networks. His long-term research vision is building trustworthy machine learning systems. He has published more than 40 papers related to trustworthy machine learning at major AI and machine learning conferences, given tutorials at IJCAI ’21, CVPR (’20, ’21), ECCV ’20, ICASSP ’20, KDD ’19, and Big Data ’18, and organized several workshops on adversarial machine learning. His research interests also include graph and network data analytics and their applications to data mining, machine learning, signal processing, and cyber security. He was the recipient of the Chia-Lun Lo Fellowship from the University of Michigan, Ann Arbor. He received a NeurIPS 2017 Best Reviewer Award and was also the recipient of the IEEE GLOBECOM 2010 GOLD Best Paper Award. Dr. Chen is currently on the editorial board of PLOS ONE.

At IBM Research, Dr. Chen has co-invented more than 30 U.S. patents and received the honor of IBM Master Inventor. In 2021, he received an IBM Corporate Technical Award. In 2020, he received an IBM Research special division award for research related to COVID-19. In 2019, he received two Outstanding Research Accomplishments on research in adversarial robustness and trusted AI, and one Research Accomplishment on research in graph learning and analysis.

Chun-Yi Lee is an Associate Professor of Computer Science at National Tsing Hua University (NTHU), Hsinchu, Taiwan, and is the supervisor of Elsa Lab. He received the B.S. and M.S. degrees from National Taiwan University, Taipei, Taiwan, in 2003 and 2005, respectively, and the M.A. and Ph.D. degrees from Princeton University, Princeton, NJ, USA, in 2009 and 2013, respectively, all in Electrical Engineering. He joined NTHU as an Assistant Professor in the Department of Computer Science in 2015. Before joining NTHU, he was a senior engineer at Oracle America, Inc., Santa Clara, CA, USA, from 2012 to 2015.

Prof. Lee’s research focuses on deep reinforcement learning (DRL), intelligent robotics, computer vision (CV), and parallel computing systems. He has contributed to the discovery and development of key deep learning methodologies for intelligent robotics, such as virtual-to-real training and transfer techniques for robotic policies, real-time acceleration techniques for semantic image segmentation, efficient and effective exploration approaches for DRL agents, and autonomous navigation strategies. He has published a number of research papers at major artificial intelligence (AI) conferences, including NeurIPS, CVPR, IJCAI, AAMAS, ICLR, ICML, ECCV, CoRL, ICRA, IROS, GTC, and more. He has also published several research papers in IEEE Transactions on Very Large Scale Integration (VLSI) Systems (TVLSI) and at the Design Automation Conference (DAC). He founded Elsa Lab at National Tsing Hua University in 2015 and has led its members to win several prestigious awards at worldwide robotics and AI challenges, such as first place at the NVIDIA Embedded Intelligent Robotics Challenge in 2016, first place worldwide at the NVIDIA Jetson Robotics Challenge in 2018, second place in the Person-In-Context (PIC) Challenge at the European Conference on Computer Vision (ECCV) in 2018, and second place worldwide in the NVIDIA AI at the Edge Challenge in 2020. Prof. Lee received the Ta-You Wu Memorial Award from the Ministry of Science and Technology (MOST) in 2020, the most prestigious award recognizing outstanding achievements in intelligence computing by young researchers.

He has also received several outstanding research awards, distinguished teaching awards, and contribution awards from multiple institutions, such as the NVIDIA Deep Learning Institute (DLI), the Foundation for the Advancement of Outstanding Scholarship (FAOS), the Chinese Institute of Electrical Engineering (CIEE), the Taiwan Semiconductor Industry Association (TSIA), and National Tsing Hua University (NTHU). In addition, he has served as a committee member and reviewer for many international and domestic conferences. His research is especially impactful for autonomous systems, decision-making systems, game engines, and vision-AI-based robotic applications.

Prof. Lee is a member of IEEE and ACM. He has served several times as a session chair and technical program committee member at ASP-DAC, NoCs, and ISVLSI. He has also served as a paper reviewer for NeurIPS, AAAI, IROS, ICCV, IEEE TPAMI, TVLSI, IEEE TCAD, IEEE ISSCC, and IEEE ASP-DAC. He was the main organizer of the 3rd, 4th, and 5th Augmented Intelligence and Interaction (AII) Workshops from 2019 to 2021, and the co-director of the MOST Office for International AI Research Collaboration from 2018 to 2020.

Henry Kautz is serving as Division Director for Information & Intelligent Systems (IIS) at the National Science Foundation, where he leads the National AI Research Institutes program. He is a Professor in the Department of Computer Science and was the founding director of the Goergen Institute for Data Science at the University of Rochester. He has been a researcher at AT&T Bell Labs in Murray Hill, NJ, and a full professor at the University of Washington, Seattle. In 2010, he was elected President of the Association for the Advancement of Artificial Intelligence (AAAI), and in 2016 he was elected Chair of the American Association for the Advancement of Science (AAAS) Section on Information, Computing, and Communication. His interdisciplinary research includes practical algorithms for solving worst-case intractable problems in logical and probabilistic reasoning; models for inferring human behavior from sensor data; pervasive healthcare applications of AI; and social media analytics. In 1989 he received the IJCAI Computers & Thought Award, which recognizes outstanding young scientists in artificial intelligence, and nearly 30 years later he received the 2018 ACM-AAAI Allen Newell Award for career contributions that have breadth within computer science and that bridge computer science and other disciplines. At the 2020 AAAI Conference he received both the Distinguished Service Award and the Robert S. Engelmore Memorial Lecture Award.

Lecture Abstract

Each AI summer, a period of enthusiasm for the potential of artificial intelligence, has led to enduring scientific insights. Today’s third summer is different because it might not be followed by a winter, and it enables powerful applications for both good and bad. The next steps in AI research are tighter symbolic-neuro integration

Lecture Outline

Jason Lee received his Ph.D. from Stanford University in 2015, advised by Trevor Hastie and Jonathan Taylor. Before joining Princeton, he was a postdoctoral scholar at UC Berkeley with Michael I. Jordan. His research interests are in machine learning, optimization, and statistics. Lately, he has worked on the foundations of deep learning, non-convex optimization, and reinforcement learning.

Csaba Szepesvari is a Canada CIFAR AI Chair, the team lead for the “Foundations” team at DeepMind, and a Professor of Computing Science at the University of Alberta. He earned his PhD in 1999 from Jozsef Attila University in Szeged, Hungary. In addition to publishing in journals and conferences, he has (co-)authored three books. He currently serves as an action editor of the Journal of Machine Learning Research and of Machine Learning, and as an associate editor of the Mathematics of Operations Research journal, and he regularly serves in senior program committee roles at machine learning and AI conferences. Dr. Szepesvari’s main interest is to develop new, principled, learning-based approaches to artificial intelligence (AI), as well as to study the limits of such approaches. He is the co-inventor of UCT, a Monte-Carlo tree search algorithm that inspired much work in AI.

Dr. Yuh-Jye Lee received his PhD in Computer Science from the University of Wisconsin-Madison in 2001. He is now a professor in the Department of Applied Mathematics at National Chiao Tung University. He also serves as a SIG Chair at the NTU IoX Center. His research is primarily rooted in optimization theory and spans a range of areas, including network and information security, machine learning, data mining, big data, numerical optimization, and operations research. Over the last decade, Dr. Lee has developed many learning algorithms in supervised, semi-supervised, and unsupervised learning, as well as linear and nonlinear dimension reduction. His recent major research applies machine learning to information security problems such as network intrusion detection, anomaly detection, malicious URL detection, and legitimate user identification. He currently focuses on online learning algorithms for large-scale datasets, stream data mining, and behavior-based anomaly detection to address big data and IoT security problems.

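Please provide the following information; once you have completed it, the Center will send the file link to your email.

(* indicates a required field)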